If someone throws a ball at you, what do you usually do? - Of course, catch it right away. Is this question very mentally retarded? But in fact, this process is one of the most complicated processes. The actual process is probably as follows: First, the ball enters the human retina, and after some elemental analysis, it is sent to the brain, and the visual cortex will analyze the image more thoroughly. Send it to the remaining cortex, compare it to any object you know, and classify the object and latitude, and finally decide what to do next: raise your hands and pick up the ball (previously predicted its trajectory) .

The above process takes place only in a fraction of a second, almost all of which is completely subconscious, and rarely makes mistakes. Therefore, reshaping human vision is not just a single difficult task, but a series of interlocking processes.

Concept of computer vision technology

Just like other disciplines, a large number of people have studied subjects for many years, but it is difficult to give a strict definition. Pattern recognition is so, the current artificial intelligence is so, and computer vision is also true. Concepts closely related to computer vision are visual perception, visual cognition, image and video understanding. These concepts have some commonalities and are fundamentally different.

Broadly speaking, computer vision is the discipline that "given the machine's natural visual ability." Natural visual ability refers to the visual ability embodied in the biological vision system. A biological natural vision can not be strictly defined, and this generalized visual definition is "all-inclusive", and it is not in line with the research status of computer vision for more than 40 years. Therefore, this "generalized computer vision definition" is impeccable. But it also lacks substantive content, but it is a kind of "circular game definition".

In fact, computer vision is essentially the study of visual perception. Visual perception, according to the definition of Wikipedia, refers to the process of organizing, identifying, and interpreting visual information in environmental expression and understanding. According to this definition, the goal of computer vision is to express and understand the environment. The core problem is to study how to organize the input image information, identify the objects and scenes, and then explain the image content.

Computer Vision (CV) is a discipline that studies how computers can be "looking" like humans. To be more precise, it uses a camera and a computer instead of the human eye to make the computer have the function of segmenting, classifying, identifying, tracking, and discriminating the target.

Computer vision is a simulation of biological vision using computers and related equipment. It is an important part of the field of artificial intelligence. Its research goal is to enable computers to have the ability to recognize three-dimensional environmental information through two-dimensional images. Computer vision is based on image processing technology, signal processing technology, probability and statistical analysis, computational geometry, neural network, machine learning theory and computer information processing technology, and analyzes and processes visual information through computer.

In general, computer vision definitions should include the following three aspects:

1. Construct a clear and meaningful description of the objective objects in the image;

2. Calculating the characteristics of the three-dimensional world from one or more digital images;

3. Make decisions that are useful for objective objects and scenes based on perceived images.

As an emerging discipline, computer vision attempts to establish an artificial intelligence system that acquires "information" from images or multidimensional data by studying related theories and techniques. Computer Vision is a comprehensive discipline that includes computer science and engineering, signal processing, physics, applied mathematics and statistics, neurophysiology and cognitive science, as well as image processing, pattern recognition, projection geometry, and statistical inference. Statistical studies and other disciplines are closely related. In recent years, there has been a strong relationship with computer graphics, three-dimensional performance and other disciplines.

Artificial intelligence and computer vision

Computer vision is closely related to artificial intelligence, but it is also fundamentally different. The purpose of artificial intelligence is to let computers see, hear, and read. The understanding of images, speech and text, these three parts basically constitute our current artificial intelligence. In these areas of artificial intelligence, vision is the core. As you know, vision accounts for 80% of all human sensory input and is the most difficult part of perception. If artificial intelligence is a revolution, then it will be in computer vision, not in other fields.

Artificial intelligence emphasizes reasoning and decision making, but at least computer vision currently mainly stays at the stage of image information expression and object recognition. "Object recognition and scene understanding" also involves the inference and decision making from image features, but is fundamentally different from the reasoning and decision making of artificial intelligence.

The relationship between computer vision and artificial intelligence:

First, it is a very important issue that artificial intelligence needs to solve.

Second, it is a strong driving force for artificial intelligence. Because it has many applications, many technologies are born from computer vision, and then applied to the AI ​​field.

Third, computer vision has a large number of application bases for quantum AI.

The principle of computer vision technology

Computer vision is to replace the visual organs with various imaging systems as input-sensitive means, and the computer replaces the brain to complete the processing and interpretation. The ultimate goal of computer vision is to enable computers to visually observe and understand the world like humans, with the ability to adapt to the environment. Before the ultimate goal is achieved, the medium-term goal of people's efforts is to create a visual system that can perform certain tasks based on a certain degree of intelligence of visual sensitivity and feedback. For example, an important application area of ​​computer vision is the visual navigation of autonomous vehicles. There is no condition to realize a system that can recognize and understand any environment like human beings and complete autonomous navigation. Therefore, the research goal of people's efforts is to realize a visual aid driving system that has road tracking capability on the highway and can avoid collision with the vehicle in front.

The point to be pointed out here is that the computer replaces the human brain in the computer vision system, but it does not mean that the computer must complete the processing of visual information in a human visual way. Computer vision can and should process visual information based on the characteristics of the computer system. However, the human visual system is by far the most powerful and perfect visual system known to humans. The research on human visual processing mechanism will provide inspiration and guidance for computer vision research. Therefore, using computer information processing methods to study the mechanism of human vision and establishing the computational theory of human vision is also a very important and interesting research field.

In-depth research in this area began in the 1950s and took three directions—replicating the human eye; replicating the visual cortex; and replicating the rest of the brain.

Copy the human eye - let the computer "go to see"

The area where the most effective results are currently in the field of “replicating the human eyeâ€. In the past few decades, scientists have built sensors and image processors that match the human eye and have even surpassed it to some extent. With powerful, optically perfect lenses and semiconductor pixels manufactured at the nano level, the precision and acuity of modern cameras has reached an alarming level. They can also take thousands of images per second and measure distances with great precision.

But the problem is that although we have been able to achieve very high fidelity at the output, in many ways these devices are no better than the 19th century pin-camera cameras: they record only the distribution of photons in the corresponding direction. And even the most powerful camera sensor can't "recognize" a ball, and the public opinion grabs it.

In other words, hardware is quite limited without software. Therefore, software in this field is the more difficult problem to be solved. But now the advanced technology of the camera does provide a rich and flexible platform for this software.

Copy the visual cortex - let the computer "describe"

We must know that the human brain is basically a "seeing" movement through consciousness. Compared to other tasks, quite a part of the brain is dedicated to "seeing", and this feat is done by the cell itself - billions of cells work together from noisy, irregular retina The mode is extracted from the signal.

If there is a difference along a line at a particular angle, or if there is a rapid movement in a certain direction, then the neuron group will be excited. Higher-level networks classify these patterns into meta-patterns: it is a ring that moves upwards. At the same time, another network is also formed: this time is a white ring with a red line. And there is another mode that will grow in size. From these rough but complementary descriptions, specific images are generated.

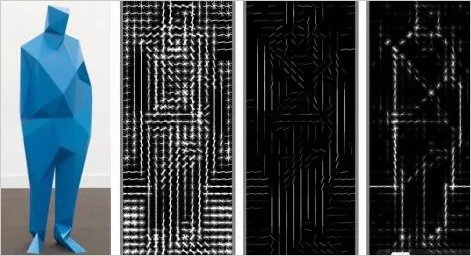

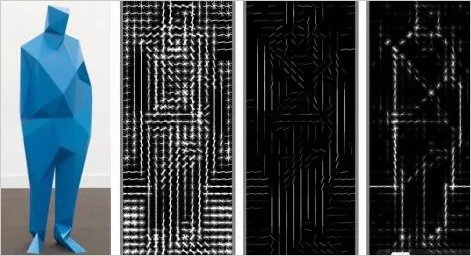

Using a technique similar to the human brain vision area to locate the edges and other features of the object, resulting in a "direction gradient histogram"

Since these networks were once considered "unpredictable and complex," in the early days of computer vision research, other approaches were adopted: the "top-down reasoning" model—such as a book that looked like " So, then you should pay attention to the pattern similar to "this". And a car looks like "this way" and it is "this way".

In some controlled situations, it is true that this process can be done for a few objects, but if you want to describe every object around you, including all angles, lighting changes, motion and hundreds of other elements, even if you are oh The identification of the baby level of the language also requires huge data that is unimaginable.

If you don't use "top-down" and switch to the "bottom-up" approach, which simulates the process in the brain, it looks better. The computer can perform a series of pictures on multiple images. Convert to find the edge of the object and find objects, angles, and motion on the image. Just like the human brain, by viewing various graphics to a computer, the computer uses a lot of calculations and statistics to try to match the "seen" shape to what was previously identified in the training.

What scientists are studying is to enable smartphones and other devices to understand and quickly identify objects in the camera's field of view. As shown above, the objects in the street view are labeled with text labels describing the objects, and the processor that completes this process is 120 times faster than the traditional shou machine.

With the advancement of parallel computing in recent years, related barriers have gradually been removed. There has been an explosive growth in mimicking the research and application of brain-like functions. The process of pattern recognition is gaining an order of magnitude acceleration, and we are making more progress every day.

Copy the rest of the brain - let the computer "understand"

Of course, it is not enough to "recognize" and "describe". A system recognizes Apple, including in any situation, any angle, any state of motion, even being bitten, and so on. But it still can't recognize an orange. And it can't even tell people: Is it apple? Can you eat? What is the size? Or specific use.

As I said before, without software, the hardware is very limited. But the problem now is that even with excellent hardware and software, and no excellent operating system, it is "always."

For people, the rest of the brain is made up of these, including long- and short-term memories, other sensory inputs, attention and cognition, and billions of knowledge gained from trillion-level interactions in the world. The way we are hard to understand is written into the interconnected nerves. And to copy it is more complicated than anything we have ever encountered.

Image processing method of computer vision technology

In computer vision systems, the processing technology of visual information mainly relies on image processing methods, including image enhancement, data encoding and transmission, smoothing, edge sharpening, segmentation, feature extraction, image recognition and understanding. After these processes, the quality of the output image is improved to a considerable extent, which not only improves the visual effect of the image, but also facilitates the analysis, processing and recognition of the image by the computer.

Image enhancement

Image enhancements are used to adjust the contrast of the image, highlight important details in the image, and improve visual quality. Image enhancement is typically performed using grayscale histogram modification techniques. The gray histogram of an image is a statistical chart showing the gray distribution of an image, closely linked to the contrast. Through the shape of the gray histogram, the sharpness and black-and-white contrast of the image can be judged. If the histogram effect of obtaining an image is not ideal, it can be appropriately modified by the histogram equalization processing technique, that is, the pixel gray scale in a known gray probability distribution image is transformed into some kind, so that it becomes A new image with a uniform grayscale probability distribution is used to make the image clear.

Image smoothing

The smoothing processing technique of the image, that is, the denoising processing of the image, is mainly to remove the image distortion caused by the imaging device and the environment during the actual imaging process, and extract useful information. It is well known that in the process of formation, transmission, reception and processing, the actual obtained ceramic image inevitably has external interference and internal interference, such as the sensitivity of the sensitivity of the sensitive components in the photoelectric conversion process, the quantization noise of the digitization process, and the transmission. Errors in the process, as well as human factors, etc., can degrade the image. Therefore, removing noise and restoring the original image is an important part of image processing.

Image encoding and transmission

The amount of data of a digital image is quite large, and the amount of data of a digital image of 512.512 pixels is 256 Kbytes. If it is assumed that 25 frames of images are transmitted per second, the channel rate of transmission is 52.4 Mbits/sec. A high channel rate means a high investment, which means an increase in the difficulty of the popularization. It is very important to compress the image data during transmission. The compression of data is mainly accomplished by the compilation and transformation of image data. Image data coding generally uses predictive coding. That is, the spatial variation law and sequence change law of image data are represented by a prediction formula. If it is known, the pixel values ​​are predicted by the previous neighboring pixel values ​​of a certain pixel. The method can compress the data of one image into a few dozens of special transmissions, and then transform back at the receiving end.

Edge sharpening

Image edge sharpening is mainly to enhance the contour edges and details in the image to form a complete object boundary. The purpose of separating the object from the image or detecting the area representing the same object surface is achieved. It is a fundamental problem in early visual theory and algorithms. It is also one of the important factors in the success of the medium and late visual.

Image segmentation

Image segmentation is the division of an image into parts, each part corresponding to the surface of an object. When segmentation is performed, the grayscale or texture of each part conforms to a certain uniform measure. One essence is to classify pixels. The classification is based on the gray value, color, spectral characteristics, spatial characteristics or texture characteristics of the pixel. Image segmentation is one of the basic methods of image processing technology, such as chromosome classification, scene understanding system, machine vision and so on. There are two main methods for image segmentation: one is the gray value segmentation method in view of the measurement house. It is based on the image gray histogram to determine the image spatial domain pixel clustering. The second is the spatial domain regional growth segmentation method. It is a segmentation region for pixel connected sets with similar properties in a certain sense (such as gray level, organization, gradient, etc.). This method has a good segmentation effect, but the disadvantage is that the operation is complicated and the processing speed is slow.

Data driven segmentation

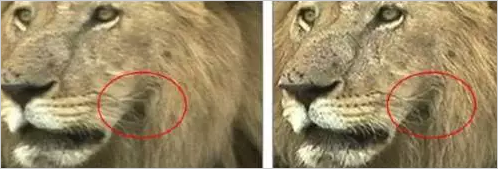

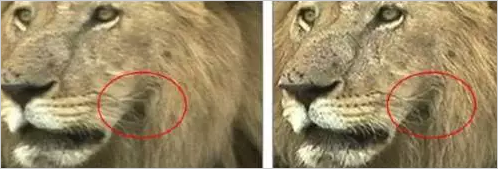

Common data-driven segmentation includes edge detection based segmentation, region-based segmentation, and edge-to-region segmentation. For the edge detection based segmentation, the basic idea is to detect the edge points in the image first, and then connect the contours according to a certain strategy to form the segmentation region. The difficulty lies in the contradiction between anti-noise performance and detection accuracy in edge detection. If the detection accuracy is improved, the false edge generated by the noise will lead to an unreasonable contour; if the anti-noise performance is improved, the contour miss detection and position deviation will occur. To this end, various multi-scale edge detection methods have been proposed, and a multi-scale edge information combination scheme is designed according to practical problems, so as to better balance the anti-noise performance and detection accuracy.

The basic idea of ​​region-based segmentation is to divide the image space into different regions according to the characteristics of the image data. Commonly used features include: grayscale or color features directly from the original image; features derived from the original grayscale or color value transformation. Methods include threshold method, region growing method, clustering method, and relaxation method.

Edge detection can obtain the local variation intensity of grayscale or color values, and region segmentation can detect the similarity and uniformity of features. Combine the two, avoid the over-segmentation of the area by the limitation of the edge points; at the same time, supplement the missing edge by the area division to make the contour more complete. For example, edge detection and connection are performed first, then the features of adjacent regions (gray mean, variance) are compared, and if they are close, they are merged; edge detection and region growth are performed on the original image, and the edge map and the region segment map are obtained, and then According to certain criteria, the final segmentation result is obtained.

Model driven segmentation

Common model-driven segmentation includes based on the Snakes model, the combinatorial optimization model, the target geometry, and the statistical model. The Snakes model is used to describe the dynamic contour of the segmentation target. Because its energy function uses integral operation, it has better anti-noise and is not sensitive to local blurring of the target, so it has wide applicability. However, this segmentation method tends to converge to the local Zui, so the initial contour should be as close as possible to the true contour.

In recent years, the research on general segmentation methods tends to regard segmentation as a combinatorial optimization problem, and uses a series of optimization strategies to complete the image segmentation task. The main idea is to define an optimization objective function according to the specific task in addition to the constraints defined by the segmentation. The solution to the segmentation is the global solution of the objective function under the constraint condition. The segmentation problem is dealt with from the perspective of combinatorial optimization. It mainly uses an objective function to comprehensively represent the various requirements and constraints of segmentation, and transforms the segmentation into an optimal solution of the objective function. Since the objective function is usually a multivariate function, a random optimization method can be employed.

Segmentation based on target geometry and statistical models is a method of integrating target segmentation and recognition, often referred to as target detection or extraction. The basic idea is to represent the geometric and statistical knowledge of the target as a model, to transform the segmentation and recognition into matching or supervised classification. Commonly used models are templates, feature vector models, connection-based models, and so on. This segmentation method can perform some or all of the identification tasks at the same time, and has high efficiency. However, due to changes in imaging conditions, the targets in the actual image tend to be different from the model, and it is necessary to face the contradiction between false detection and missed detection. The search step in matching is also quite time consuming.

Image recognition

The image recognition process can actually be regarded as a marking process, which uses the recognition algorithm to identify the divided objects in the scene. Give the object a specific mark, which is a task that the computer vision system must complete. . According to the network image recognition, from easy to difficult, the town is divided into i-type problems. In the first type of identification problem, the pixels in the image express a certain information of an object. In the second type of problem, the object to be identified is a tangible whole. The two-dimensional image information is sufficient to identify the object, such as text recognition, some three-dimensional body recognition with a stable visible surface, and the like. The third type of problem is to obtain a three-dimensional representation of the measured object from the input two-dimensional map, feature map, 2x5 dimensional map, and so on. Here is the question of how to extract the hidden three-dimensional information. It is a hot topic in this research.

The current methods for image recognition are mainly divided into decision theory and structural methods. The basis of the decision theory method is the decision function, which is used to classify and identify the pattern vector. It is based on the timing description (such as statistical texture): the core of the structure method is to decompose the object into a 'mode or mode primitive, and different The object structure has different primitive strings (or strings), and the encoding boundary is obtained by using a given pattern primitive for the unknown object to obtain a string, and its genus is determined according to the string. This is a method that relies on symbols to describe the relationship between the objects being measured.

Application field of computer vision

The application fields of computer vision mainly include the interpretation of photos and video materials such as aerial photos, satellite photos, video clips, precision guidance, mobile robot visual navigation, medical aided diagnosis, hand-eye system of industrial robots, map drawing, and object shape analysis. And identification and intelligent human machine interface.

One of the purposes of early digital image processing is to improve the quality of photos by using digital technology, and to assist in the reading and classification of aerial photos and satellite photos. Due to the large number of photographs that need to be interpreted, it is hoped that there will be an automatic visual system for interpretation. In this context, many aerial photo and satellite photo interpretation systems and methods have been produced. A further application of automatic interpretation is to directly determine the nature of the target, perform real-time automatic classification, and combine with the guidance system. At present, the commonly used guidance methods include laser guidance, television guidance and image guidance. In the dao bomb system, inertial guidance is often combined with image guidance, and images are used for precise terminal guidance.

The hand-eye system of industrial robots is one of the most successful fields of computer vision applications. Because many factors in the industrial field, such as lighting conditions and imaging directions, are controllable, the problem is greatly simplified, which is conducive to the actual system. Unlike industrial robots, for mobile robots, because of its ability to act, it is necessary to solve the behavior planning problem, that is, the understanding of the environment. With the development of mobile robots, more and more visual capabilities are required, including road tracking, avoidance obstacles, and specific target recognition. At present, the research on mobile robot vision system is still in the experimental stage, and most of them use remote control and far vision methods.

Image processing techniques used in medicine generally include compression, storage, transmission, and automatic/aided classification interpretation, and can also be used as an auxiliary training means for doctors. Work related to computer vision includes classification, interpretation, and reconstruction of fast three-dimensional structures. Mapping has long been a labor, material and time work. In the past, manual measurement was carried out. Nowadays, more methods are used to map the three-dimensional shape in aerial survey and stereo vision, which greatly improves the efficiency of map drawing. At the same time, the three-dimensional shape analysis and recognition of general objects has always been an important research goal of computer vision, and has made some progress in the feature extraction, representation, knowledge storage, retrieval and matching recognition of the scenes, which constitute some for three-dimensional The system of scene analysis.

In recent years, biometrics-based identification techniques have received extensive attention, focusing on features such as faces, irises, fingerprints, and sounds, most of which are related to visual information. Another important application closely related to biometrics is the use of intelligent human interface. Now the computer and human communication is mechanical, the computer can not identify the user's true identity, in addition to the keyboard, mouse, other input means is not mature. Using computer vision technology, the computer can detect whether the user exists, identify the user, and identify the user's body (such as nodding, shaking his head). In addition, this kind of human-computer interaction can also be extended to all occasions that require human-computer interaction, such as entrance security control, inspection and release of transit personnel.

Butt Weld Tee

Why is it called butt weld tee? Because there are three outlets. All the outside diameters are same, it should be equal tee. For those tee fittings whose run pipes are larger than branch pipe, it is

reducing tee. In addition, there are Split Tee, barred tee, and lateral tee 45degree.

Butt weld tee

Specifications for butt weld tee:

1. Size:1/2``-24``(Seamless Pipe fittings) 26``-96``

2. Wall thickness:SCH5-XXS

3. Material:carbon steel A234 WPB, alloy steel, stainless steel

4. Standard:ASME B16.9, ASME B16.25, MSS SP75, EN10253 etc.

ANSI B16.9 pipe tee

If customer have special requirements on sizes of each part, these fittings shall be customized.Butt weld tees are widely in used many fields, like architecture, electric power, petroleum,chemical industry etc.

Butt Weld Tee

Split Tee,Side Outlet Tee,Galvanized Pipe Tee,Lateral Tee 45 Degree

CANGZHOU HENGJIA PIPELINE CO.,LTD , https://www.hj-pipeline.com